How Scammers Are Using AI to Supercharge Scams

Author: James Greening

Artificial intelligence is changing the way scams are created, scaled, and delivered. Tools like image generators, chatbots, and voice synthesizers are now being used to impersonate people, automate phishing messages, and produce fake products that do not exist. With minimal input and almost no cost, scammers can now produce convincing material in seconds.

According to the Global State of Scams 2024 report by the Global Anti-Scam Alliance (GASA), many scam victims believe AI was used in scam messages they received, particularly via text, chat, or voice. Thirty-one percent were uncertain, and 16 percent said AI was not involved.

Nearly half of all scams are now completed within 24 hours, a pace driven in part by the speed and automation that generative AI enables. Scam websites, phishing emails, fake endorsements, and impersonation calls can all be created, deployed, and scaled in a fraction of the time it once took.

In this article, we look at how scammers are already using AI in ways that are easy to miss, and what you can watch out for to avoid falling for them.

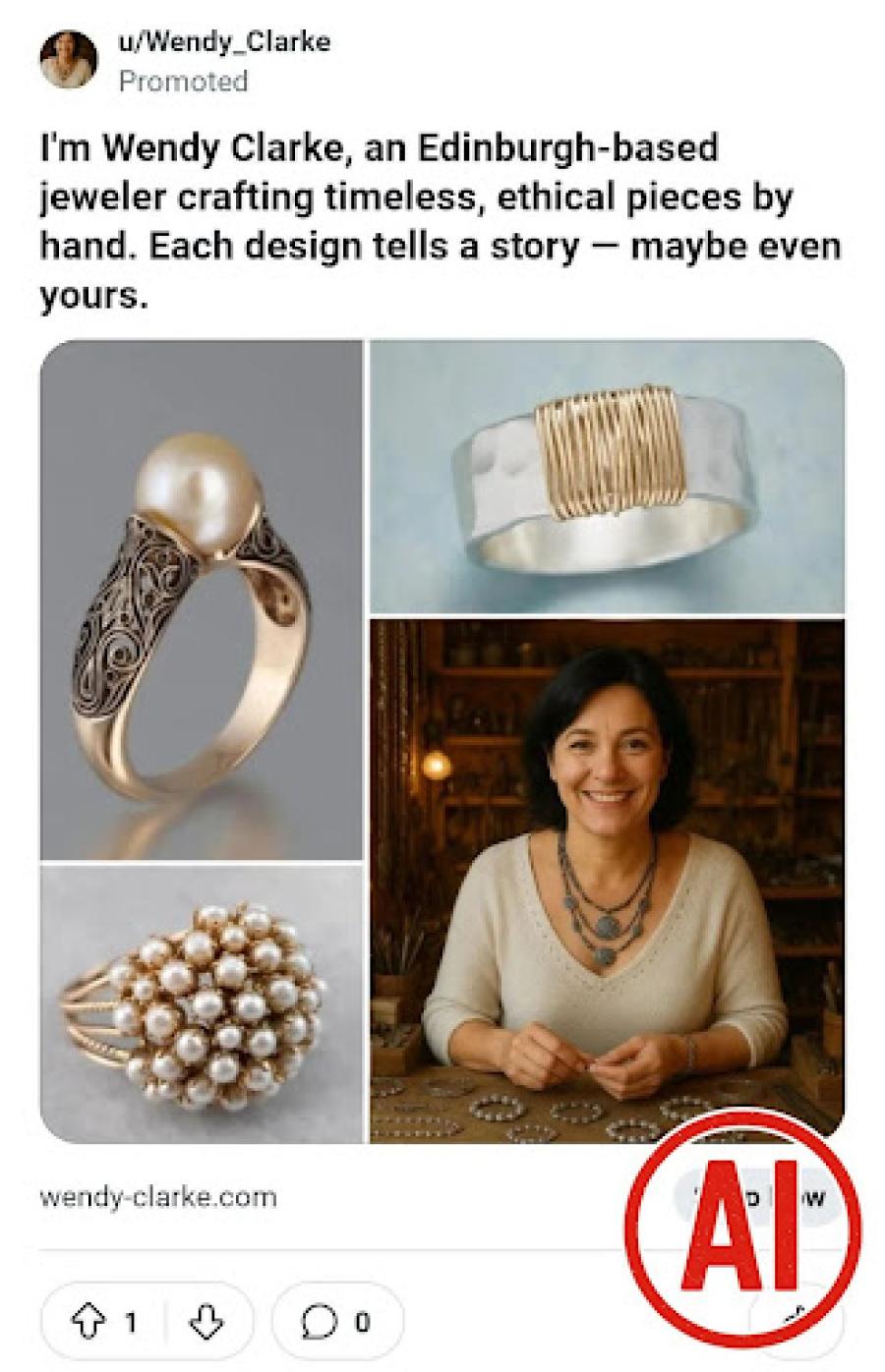

Fake online shops using AI-generated imagery

AI image generators have made it easy to produce original product photos that appear realistic and professionally shot. Scammers no longer need to steal images from other sellers. Instead, they generate new visuals featuring clothing, electronics, or furniture that look appealing but do not actually exist.

These images are used to build fake online stores and run adverts on social media. While the visuals appear polished, closer inspection often reveals distorted branding, odd proportions, warped text, or unnatural lighting. Victims are lured in by heavy discounts and promotional urgency. After payment, they typically receive nothing or a counterfeit product.

Phishing emails written by language models

Phishing emails have long relied on urgency and impersonation, but their language often gave them away. That is no longer the case. AI-powered writing tools can now create phishing messages that sound natural and match the tone of legitimate institutions.

Scammers can quickly generate realistic-looking emails that mimic messages from banks, government services, or well-known companies. They can also adjust the wording depending on the season or location, such as tax filing reminders or parcel delivery notices. These messages often lead to fake login pages or prompt recipients to download malware.

Voice cloning used in impersonation scams

With just a short audio clip, scammers can now generate synthetic voices that sound like real people. This technology is increasingly being used in impersonation scams.

Some scams involve calls where the voice sounds just like a loved one in distress. The FTC has warned that scammers can now clone voices using AI, making family emergency scams even harder to detect. A cloned voice can sound real enough to trick people into thinking a relative is in trouble.

This same technology has already been used in business scams. In one case, a UK energy company transferred over $243,000 after receiving a call that mimicked the voice of its CEO.

Deepfake videos in fake investment schemes

Video-based scams are also evolving. Deepfake technology allows scammers to create fake videos where well-known individuals appear to promote investment platforms or giveaways. These videos are often used to target social media users or appear in online adverts.

Scammers have used deepfakes of Elon Musk, Anthony Bolton, and Taylor Swift to promote fraudulent crypto platforms and investment schemes. These videos are usually paired with “limited-time” language and scam WhatsApp groups. ScamAdviser has shared a video of one real example, in which a hacked livestream was used to push a Musk-themed crypto scam.

How to spot AI-driven scams

AI-generated visuals often contain physical inconsistencies. Look for warped fabric, mismatched reflections, lighting errors, or missing logos in adverts or product photos. If something looks too polished but lacks a traceable origin, be sceptical.

Messages created by AI can sound overly polished or generic. Messages that reference no personal context, or use phrasing that feels slightly off, are worth second-guessing, especially when they appear to come from people you know.

Deepfake videos and audio may reuse familiar scripts or seem emotionally flat. If a livestream or ad shows a celebrity endorsing an investment scheme, check their verified social media accounts before believing or sharing it.

Bottom Line: Staying alert

Generative AI is making scams faster, more scalable, and harder to detect. The signs that once gave them away – poor grammar, stolen images, awkward phrasing – are disappearing.

But there are still ways to spot the difference. Unfamiliar links, urgent payment requests, and out-of-character messages from public figures or loved ones should all be treated with suspicion. By slowing down, checking details, and thinking critically about what you're seeing or hearing, you can avoid getting caught in the rush.
